Deep Learning Models Explained with Types and Real World Uses

July 9, 2025

The human brain is often described as the most powerful computer: it can carry out many complex tasks at once, something even sophisticated artificial intelligence cannot match. This capability inspired deep learning, a paradigm that tries to imitate how the brain processes information.

This article breaks down the definition of deep learning, discusses its real-life uses and applications, and focuses on common deep learning models along with the benefits and shortcomings of this developing technology. We will also point out some major differences between deep learning and machine learning, touching on the broader AI vs machine learning distinction.

Let's start with the main question: what is deep learning?

Understanding Deep Learning: The Essentials

Deep learning is a subfield of machine learning built on multilayered models called deep neural networks. These networks are designed to loosely simulate the complex decision-making process of the human brain. At the core of this approach is the artificial neural network: a system of layers of interconnected processing elements, or neurons, that jointly process data and learn patterns from it.

Inspired by how people think and process information with their brains, these networks can handle a broad range of tasks and represent some of the most sophisticated machine learning techniques. They are especially effective in areas such as autonomous driving, medical diagnosis, and financial fraud detection.

How Does Deep Learning Work?

Deep learning is a subset of machine learning loosely modeled on how the human brain processes information, but it is built entirely from algorithms, data, and organized layers. Its basic building block is the neural network, composed of interconnected units (known as neurons or nodes) that are trained to identify patterns in massive volumes of data.

Every neural network consists of several layers: an input layer where raw data (such as audio, images, or text) is fed in, a series of hidden layers that transform and process the data, and an output layer that returns a final decision, e.g. the object in an image or the translation of a sentence. Passing data sequentially from the input layer through the hidden layers to the output layer to produce a prediction is called forward propagation. The network learns by adapting its internal parameters (weights and biases) so that these predictions become more accurate.
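To make forward propagation concrete, here is a minimal sketch in plain Python with no framework assumed. The network shape (2 inputs, 3 hidden neurons, 1 output), the sigmoid activation, and all weight values are hypothetical choices for illustration; a real network would learn its weights rather than use hand-picked ones.

```python
import math


def sigmoid(z):
    """Squash a weighted sum into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases):
    """One fully connected layer: neuron k outputs sigmoid(w_k . inputs + b_k)."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def forward(x, layers):
    """Forward propagation: feed the input through each (weights, biases) layer in order."""
    activation = x
    for weights, biases in layers:
        activation = dense(activation, weights, biases)
    return activation


# Hypothetical parameters: 2 inputs -> 3 hidden neurons -> 1 output neuron.
hidden_layer = ([[0.5, -0.2], [0.3, 0.8], [-0.7, 0.1]], [0.0, 0.1, -0.1])
output_layer = ([[1.0, -1.5, 0.5]], [0.2])

prediction = forward([1.0, 0.5], [hidden_layer, output_layer])
print(prediction)  # a one-element list holding a value between 0 and 1
```

Each call to `dense` is one layer, and `forward` simply chains them, which is all forward propagation does. Frameworks such as Keras perform the same computation with fast matrix operations on GPUs.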

These systems become smarter over time through a second process called backpropagation. It measures how far the model's output was from the correct response (the error, or loss) and works backward from the output layer, layer by layer, to calculate how much each internal parameter contributed to that error. An optimization algorithm such as gradient descent then uses these gradients to adjust the parameters and reduce future mistakes. This approach is particularly relevant in supervised learning (as opposed to unsupervised learning), where labeled datasets (e.g., handwritten digits paired with their correct values) enable precise error correction.
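The sketch below shows backpropagation and gradient descent working together on a tiny network, again in plain Python. Everything here is an assumption for illustration: the toy OR dataset, the 2-3-1 layer sizes, the sigmoid activations, the squared-error loss, the learning rate of 0.5, and the number of epochs. It updates the weights after every example (stochastic gradient descent) rather than after a full batch.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical labeled toy dataset: the logical OR of two binary inputs.
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
HIDDEN = 3  # number of hidden neurons (arbitrary choice)


def init_params(seed=0):
    """Small random starting weights; a fixed seed keeps runs reproducible."""
    rng = random.Random(seed)
    return {
        "w1": [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(HIDDEN)],
        "b1": [0.0] * HIDDEN,
        "w2": [rng.uniform(-1, 1) for _ in range(HIDDEN)],
        "b2": 0.0,
    }


def forward(p, x):
    """Forward propagation: returns the hidden activations and the output."""
    h = [
        sigmoid(sum(w * xi for w, xi in zip(p["w1"][j], x)) + p["b1"][j])
        for j in range(HIDDEN)
    ]
    y = sigmoid(sum(p["w2"][j] * h[j] for j in range(HIDDEN)) + p["b2"])
    return h, y


def mean_squared_error(p):
    return sum((forward(p, x)[1] - t) ** 2 for x, t in DATA) / len(DATA)


def train_epoch(p, lr=0.5):
    """One pass over the data: backpropagation computes the gradients,
    then a gradient descent step updates the parameters after each example."""
    for x, t in DATA:
        h, y = forward(p, x)
        # Backward pass, output layer: d(loss)/d(pre-activation) for loss = (y - t)^2.
        delta_out = 2.0 * (y - t) * y * (1.0 - y)
        # Backward pass, hidden layer: chain rule through the output weights
        # (w2 is read here, before it is updated below).
        delta_hidden = [delta_out * p["w2"][j] * h[j] * (1.0 - h[j]) for j in range(HIDDEN)]
        # Gradient descent: move every parameter a small step against its gradient.
        for j in range(HIDDEN):
            p["w2"][j] -= lr * delta_out * h[j]
            for i in range(len(x)):
                p["w1"][j][i] -= lr * delta_hidden[j] * x[i]
            p["b1"][j] -= lr * delta_hidden[j]
        p["b2"] -= lr * delta_out


params = init_params()
loss_before = mean_squared_error(params)
for _ in range(3000):
    train_epoch(params)
loss_after = mean_squared_error(params)
print(f"MSE before training: {loss_before:.4f}, after: {loss_after:.4f}")
```

The error after training should end up well below its starting value. In a framework, automatic differentiation computes the equivalents of `delta_out` and `delta_hidden` for you, so you only define the forward pass and the loss.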

For example, when training a model to read handwritten digits, the initial layers may learn simple features such as edges and curves. Deeper layers combine these into increasingly complex patterns until the network can recognize the digit with high accuracy.

Training deep learning models demands substantial computational power. That is why developers often use specialized hardware, such as powerful GPUs that can run thousands of operations in parallel. Training models on many local GPUs can be expensive and resource-intensive, so many developers turn to cloud-based solutions. Frameworks such as Keras, TensorFlow, and PyTorch are commonly used to build and train these models thanks to their modular design, speed, and support for deep learning operations; earlier frameworks such as MXNet and CNTK are no longer actively developed.

Types of Deep Learning Models

Deep learning models are effective tools that can automatically extract meaningful patterns from data. This makes them particularly useful in fields such as medical imaging, predictive analytics, and language-based applications. Common types of deep learning models include convolutional neural networks (CNNs), feedforward neural networks (FNNs), and recurrent neural networks (RNNs).

Convolutional Neural Networks (CNNs) are highly effective at working with visual data. They are built to recognize spatial hierarchies in images, which makes them well suited to tasks such as facial recognition, traffic sign detection, and satellite imagery analysis. CNNs are one of the fundamental types of deep learning models for spatial pattern recognition.
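As a rough illustration of what a single CNN filter does, the following sketch slides a 3x3 kernel over a tiny image and applies a ReLU. It is a "valid" convolution with no padding, implemented as cross-correlation, which is what most deep learning frameworks actually compute. The image and the vertical-edge kernel are hypothetical; in a trained CNN the kernel values are learned, and many filters are stacked in layers so that early ones pick up edges and later ones more complex shapes.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2-D cross-correlation, the core operation of a CNN layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map):
    """Keep positive responses, zero out the rest."""
    return [[max(0, v) for v in row] for row in feature_map]


# Hypothetical 5x5 grayscale image: dark left part, bright right part (a vertical edge).
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hand-written vertical-edge kernel; a real CNN would learn these values.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

for row in relu(conv2d(image, kernel)):
    print(row)  # prints [3, 3, 0] on each of the three rows
```

The filter responds strongly only where its window straddles the edge and outputs zero over the uniform bright region, which is the kind of low-level feature the early layers of a CNN detect.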

Feedforward Neural Networks (FNNs) are the simplest form of artificial neural network. Information flows in one direction, from the input layer to the output layer, with no loops, and they are widely used for tasks such as fraud detection, handwriting recognition, and sentiment analysis.

Recurrent Neural Networks (RNNs) specialize in processing sequential or time-based data. Because they can retain information from previous steps in a sequence, they are used in real-time predictive models for tasks such as stock price prediction, handwriting generation, and language modeling.
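The "memory" of an RNN is its hidden state. This minimal sketch assumes a single hidden unit with a tanh activation and hand-picked weights (all hypothetical), and shows how an input seen at the first step still influences the state several steps later even though the later inputs are zero.

```python
import math


def rnn_forward(sequence, w_x, w_h, b):
    """Run a one-unit (Elman-style) recurrent network over a sequence.

    Returns the hidden state after each time step.
    """
    h = 0.0  # the memory starts empty
    states = []
    for x in sequence:
        # Each new state combines the current input with the previous state,
        # which is how information from earlier steps is carried forward.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# Hypothetical fixed weights; a trained RNN would learn these from data.
states = rnn_forward([1.0, 0.0, 0.0, 0.0], w_x=1.0, w_h=0.9, b=0.0)
print([round(s, 3) for s in states])
```

The state decays gradually instead of dropping to zero after the first step. Real RNNs use vectors of hidden units and weight matrices instead of single numbers, and learn `w_x`, `w_h`, and `b` through backpropagation.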

Machine Learning vs. Deep Learning

Machine learning and deep learning both fall under artificial intelligence, but they differ considerably in complexity and scope. Machine learning is the broader term, encompassing a variety of algorithms that recognize trends, make predictions, and improve their performance with experience. Deep learning, by contrast, is a specific branch of machine learning that uses deep neural networks: artificial neural networks loosely patterned after the wiring of the human brain.

Depending on the approach, both machine learning and deep learning can be applied to tasks with labeled or unlabeled data. Popular applications include fraud detection, recommendation engines, forecasting, automated customer support, and machine learning in business intelligence.

Deep learning tends to outperform conventional machine learning when data contains complex patterns and when datasets are large. For example, it excels at facial recognition, self-driving, and real-time language translation because it can automatically extract features and learn many layers of abstraction using sophisticated deep learning techniques.

Conventional machine learning models, in contrast, train faster, often perform better on smaller datasets, are easier to interpret, and require fewer computational resources, often leveraging efficient machine learning platforms. This makes them well suited to problems such as email filtering, credit scoring, and predicting sales trends.

Deep Learning in Real-World Applications

Natural Language Processing and Speech Recognition

Natural Language Processing (NLP) is an interdisciplinary field spanning linguistics, computer science, and artificial intelligence that studies how computers can understand, interpret, and generate human language, both written and spoken. The technologies it supports include chat assistants that can hold a conversation, real-time translation applications that convert one language into another, and content moderation systems that can flag inappropriate language or sentiment.

Statistical NLP is a branch of NLP that combines algorithmic processing with machine learning and deep learning. This approach enables systems to process huge amounts of text and voice data, sort information into categories, and identify the likely meaning of words or phrases. More advanced architectures, such as Recurrent Neural Networks (RNNs) and transformer models, are key types of deep learning that let NLP systems improve over time as they recognize patterns and context in unstructured data, such as customer service transcripts and social media posts.
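Transformer models are built around an operation called scaled dot-product attention, which lets each token weigh how relevant every other token is to it. The sketch below is a simplified version in plain Python under clear assumptions: the 2-dimensional token vectors are made up, and it skips the learned query, key, and value projections and the multiple attention heads that a real transformer uses.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: each output is a weighted average of the
    value vectors, weighted by how well the query matches each key."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs


# Hypothetical 2-dimensional vectors for a three-token sentence.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Self-attention: queries, keys, and values all come from the same tokens here;
# a real transformer would first multiply them by learned projection matrices.
contextual = attention(tokens, tokens, tokens)
for vector in contextual:
    print([round(v, 3) for v in vector])
```

Each output vector is a context-aware blend of the whole sentence, which is how a transformer builds up the "patterns and context" described above.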

Automatic speech recognition (ASR), sometimes called speech recognition or speech-to-text, is a technology that converts spoken language into text. It powers features such as voice dictation on smartphones, transcription of virtual meetings, and voice-powered search. Unlike voice recognition, which identifies who is speaking, speech recognition focuses on what is said and transcribes it into written text.

Application Modernization

Deep learning continues to transform how organizations develop and update software. In particular, generative AI is becoming a trusted asset for application modernization and IT process automation, helping to close the skills gap that continues to widen in tech teams.

The emergence of large language models (LLMs) and advances in natural language processing (NLP) have led to AI systems trained on large collections of source code, which can now assist developers effectively. These models use deep neural networks to interpret code and produce context-aware suggestions.

Developers can increasingly describe what they want to achieve in plain English and let AI tools suggest applicable code, from a single line to a complete function. This not only reduces development time but also eliminates much of the repetitive coding work, allowing teams to focus on complex problem-solving. Whether generative AI is used to refactor legacy systems or to develop cloud-native architectures, it is increasingly becoming part of the modern software production process.

Computer Vision

Computer vision is a branch of artificial intelligence (AI) that focuses on enabling computers to process visual information, such as images and videos, and draw conclusions from it. It encompasses tasks like object recognition, image classification, and pixel-level understanding through semantic segmentation. Computer vision systems typically rely on deep neural networks to derive meaningful information from visual data and make relevant decisions when an anomaly or pattern is identified.

In practice, computer vision is commonly used to monitor equipment, streamline processes, and ensure quality control. These systems can analyze thousands of images per minute, detecting problems or abnormalities that might otherwise be imperceptible to humans. Its impact reaches across industries, including agriculture, logistics, healthcare, retail, and automotive.

To function effectively, computer vision requires a substantial volume of data. Through repeated exposure and evaluation, often using supervised machine learning, the system learns over time to tell different visual patterns apart. These models learn to recognize context and differentiate objects without the programmer explicitly defining the rules. Given enough examples, the system learns to predict and classify images on its own.

Examples of Computer Vision in Action:

- Agriculture: Drones equipped with computer vision examine crop health, identify diseases early, and help determine the most effective irrigation practices, giving farmers the input they need to make informed decisions. This is a prime example of what deep learning is used for: enabling precise agricultural analytics.

- Healthcare: AI-powered imaging devices can help physicians diagnose conditions like pneumonia or diabetic retinopathy faster and more accurately.

- Logistics: Computer vision is applied in warehouses to monitor product availability in real time, verify that items are placed correctly, and help keep employees safe.

- Retail and E-commerce: Visual search tools let customers upload an image and find related items, which makes shopping more personalized and easier overall. Businesses implementing these tools often hire ML developers to optimize AI-driven features.

Generative AI

Generative AI (Gen AI) is a form of artificial intelligence that can generate original content, such as text, images, and audio, in response to user prompts. It uses deep learning methods to identify patterns in existing data and create realistic, relevant outputs.
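Many generative text models produce output one token at a time: the network scores every possible next token, a softmax turns the scores into probabilities, and one token is sampled and fed back in. The sketch below shows only that sampling loop. The tiny `TOY_LOGITS` table is hypothetical and stands in for the deep neural network, and the `temperature` parameter (lower values make output more predictable) is a common but assumed design choice.

```python
import math
import random

# Hypothetical next-token scores (logits). In a real generative model a deep
# neural network computes these from the whole preceding context; this
# hand-written table only looks at the previous token.
TOY_LOGITS = {
    "<start>": {"deep": 2.0, "neural": 1.0, "data": 0.5},
    "deep": {"learning": 3.0, "networks": 1.5},
    "neural": {"networks": 3.0},
    "data": {"drives": 1.0, "<end>": 1.0},
    "learning": {"<end>": 2.0, "networks": 0.5},
    "networks": {"learn": 1.5, "<end>": 1.5},
    "learn": {"<end>": 1.0},
    "drives": {"learning": 1.0},
}


def sample_next(logits, temperature, rng):
    """Softmax with temperature, then draw one token from the resulting distribution."""
    tokens = list(logits)
    scaled = [logits[t] / temperature for t in tokens]
    m = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - m) for s in scaled]
    return rng.choices(tokens, weights=weights, k=1)[0]


def generate(temperature=1.0, max_tokens=10, seed=0):
    """Autoregressive generation: repeatedly sample a token and feed it back in."""
    rng = random.Random(seed)
    output, current = [], "<start>"
    for _ in range(max_tokens):
        current = sample_next(TOY_LOGITS[current], temperature, rng)
        if current == "<end>":
            break
        output.append(current)
    return output


print(" ".join(generate(temperature=0.7)))
```

With a very low temperature the loop becomes effectively greedy and always picks the highest-scoring token; higher temperatures produce more varied text.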

It is applied across industries such as healthcare, media, education, and customer support, and is especially useful with unstructured data such as emails, audio files, and social media posts. Working with these inputs, Gen AI can automate tasks such as digital assistance and report summarization.

Despite ethical and legal concerns, many organizations are integrating Gen AI to increase efficiency, reduce costs, and scale services, which raises questions about responsible innovation.

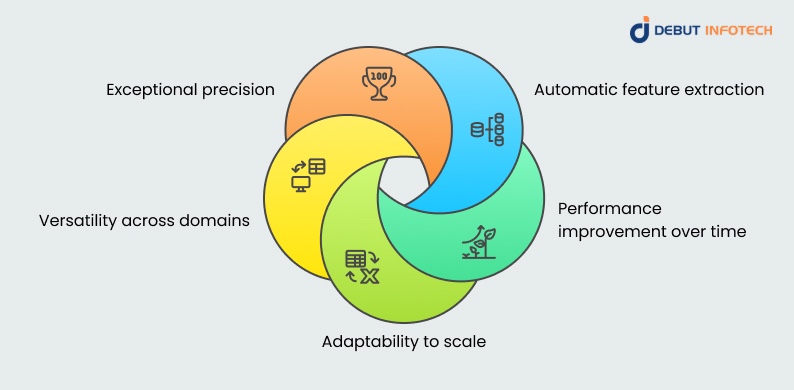

Benefits of Deep Learning

There are numerous advantages that make deep learning an empowering technology today. Some of these include:

- Automatic feature extraction: Deep learning models can learn meaningful underlying patterns or attributes directly from raw data, without requiring extensive manual preprocessing.

- Performance improvement over time: The more data they have at their disposal, the more deep learning systems can refine their accuracy, improving with each training iteration.

- Adaptability to scale: These models are particularly good at handling large and complex datasets, which makes them suitable for applications such as recommendation engines, autonomous driving systems, and real-time fraud detection.

- Versatility across domains: Deep learning can be applied to a wide range of applications, including satellite imaging, medical anomaly detection, text generation, and even spoken-language translation.

- Exceptional precision: With access to large datasets and the right training, deep learning systems can outperform classic machine learning models on visual recognition, speech interpretation, and sentiment analysis tasks.

Limitations of Deep Learning

Although deep learning has impressive capabilities, it also has several significant disadvantages:

- Heavy Computational Demands: Training a deep learning model often requires substantial computational resources and access to high-performance hardware such as graphics processing units (GPUs) or tensor processing units (TPUs), which can drive up infrastructure costs.

- Overfitting Risks: Deep learning models are prone to overfitting, particularly when the dataset is small or lacks diversity. In that case, the model achieves very high accuracy on its training data but performs poorly on new, unseen data, which limits its practical usefulness.

- Lack of Transparency: Deep learning systems often behave like black boxes. In highly sensitive areas such as healthcare or finance, where decisions must be accountable, it can be hard to trace how a given output was produced.

- Limited Explainability: Where decisions must be backed by clear reasoning, such as in criminal justice or autonomous driving, it can be difficult to explain why a deep learning model made a specific prediction or took a specific action. This lack of interpretability can hinder trust and adoption.

These limitations underscore broader machine learning challenges in deploying complex AI systems.
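Overfitting is easiest to see by comparing accuracy on training data with accuracy on unseen data. To keep the sketch short, it uses a 1-nearest-neighbour "memorizer" instead of a deep network, on a hypothetical noisy dataset; the warning sign is the same for a deep model: near-perfect training accuracy with noticeably lower accuracy on new data.

```python
import random

rng = random.Random(42)


def make_data(n):
    """Hypothetical task: the label is 1 when x > 0.5, but 20% of labels are flipped as noise."""
    data = []
    for _ in range(n):
        x = rng.random()
        label = int(x > 0.5)
        if rng.random() < 0.2:
            label = 1 - label
        data.append((x, label))
    return data


train_set = make_data(30)   # small training set
test_set = make_data(1000)  # new, unseen data


def memorizer(x):
    """Overfit model: returns the label of the nearest training point (1-nearest neighbour),
    so it reproduces every training label, noise included."""
    _, nearest_label = min(train_set, key=lambda pair: abs(pair[0] - x))
    return nearest_label


def simple_rule(x):
    """A simpler model that captures only the underlying trend."""
    return int(x > 0.5)


def accuracy(model, data):
    return sum(model(x) == y for x, y in data) / len(data)


for name, model in [("memorizer", memorizer), ("simple rule", simple_rule)]:
    print(f"{name}: train {accuracy(model, train_set):.2f}, test {accuracy(model, test_set):.2f}")
```

The memorizer always scores 1.00 on its training data because it simply looks each point up, but on the held-out data it typically falls below the simple rule, which ignores the noise instead of memorizing it.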

Conclusion: Is It Worth Investing in Deep Learning Models?

Absolutely!

Whether it is powering an intelligent recommendation system or supporting advanced image and speech recognition, deep learning has changed the way modern businesses solve problems and drive innovation.

If you are new to the world of deep learning, some concepts will feel complicated, but you do not have to navigate them alone. An experienced AI development company can help you choose the deep learning architectures that fit your specific objectives.

Whether you want to build stronger customer relationships through personalization, automate complicated processes, or secure your network with predictive analytics, deep learning gives you a future-proof foundation. With the right strategy and skills, the potential is enormous and the outcome can be game-changing.

Want to see what deep learning can offer to your business? Contact Debut Infotech and let us make smarter solutions together.

Frequently Asked Questions (FAQs)

A. Deep learning models function by learning on their own from large datasets, which greatly reduces (though does not eliminate) the need for human involvement such as manual feature engineering.

A. Deep learning, a branch of machine learning, uses multi-layered neural networks to analyze data and generate predictions or decisions. ChatGPT, for example, is powered by a transformer-based neural network, a deep learning architecture.

A. Deep learning, sometimes described as neural network-based learning, occurs when artificial neural networks process and learn from vast amounts of data.
